SpeedFolding

Learning Efficient Bimanual Folding of Garments

Yahav Avigal*,1, Lars Berscheid*,1,2, Tamim Asfour2, Torsten Kröger2, Ken Goldberg1

1UC Berkeley 2Karlsruhe Institute of Technology (KIT) *equal contribution

Yahav Avigal*,1, Lars Berscheid*,1,2, Tamim Asfour2, Torsten Kröger2, Ken Goldberg1

1UC Berkeley 2Karlsruhe Institute of Technology (KIT) *equal contribution

Folding garments reliably and efficiently is a long standing challenge in robotic manipulation. An intuitive approach is to initially manipulate the garment to a canonical smooth configuration before folding, a task that has proven to be challenging due to the complex dynamics and high dimensional configuration space of garments. In this work, we develop SpeedFolding, a reliable and efficient bimanual system to manipulate an initially crumpled garment to (1) a smooth and (2) a subsequent folded configuration following user-defined instructions given as folding lines. Our primary contribution is a novel neural network architecture that is able to predict two corresponding poses to parameterize a diverse set of bimanual action primitives. After learning from 4300 human-annotated or self-supervised actions, the robot is able to fold garments from a random initial configuration in under 120s on average with a success rate of 93%. Real-world experiments show that the system is able to generalize to unseen garments of different color, shape, and stiffness. SpeedFolding decreases the folding time by over 30% in comparison to baselines, and outperforms prior works requiring 10 to 20min per fold by 5-10x.

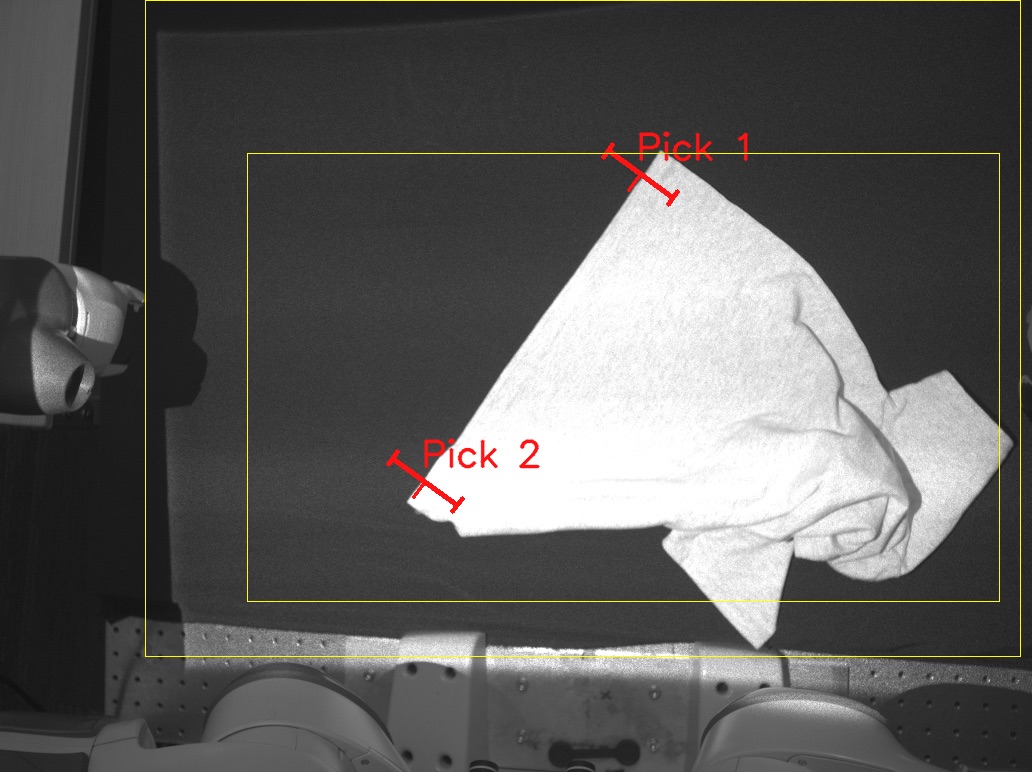

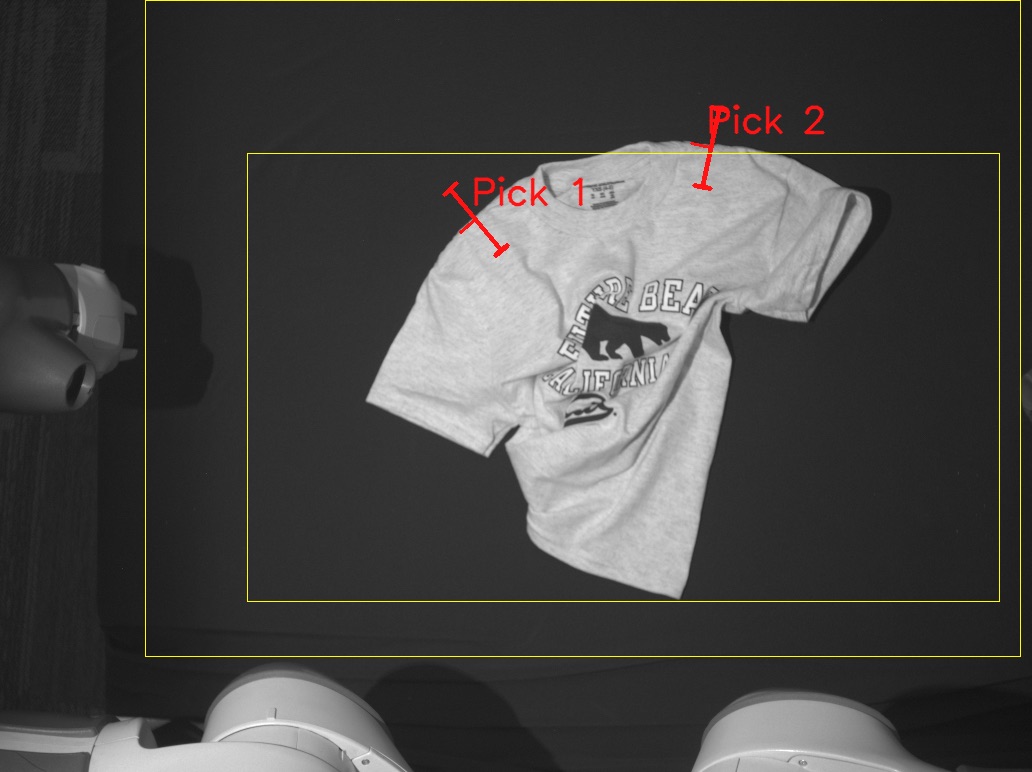

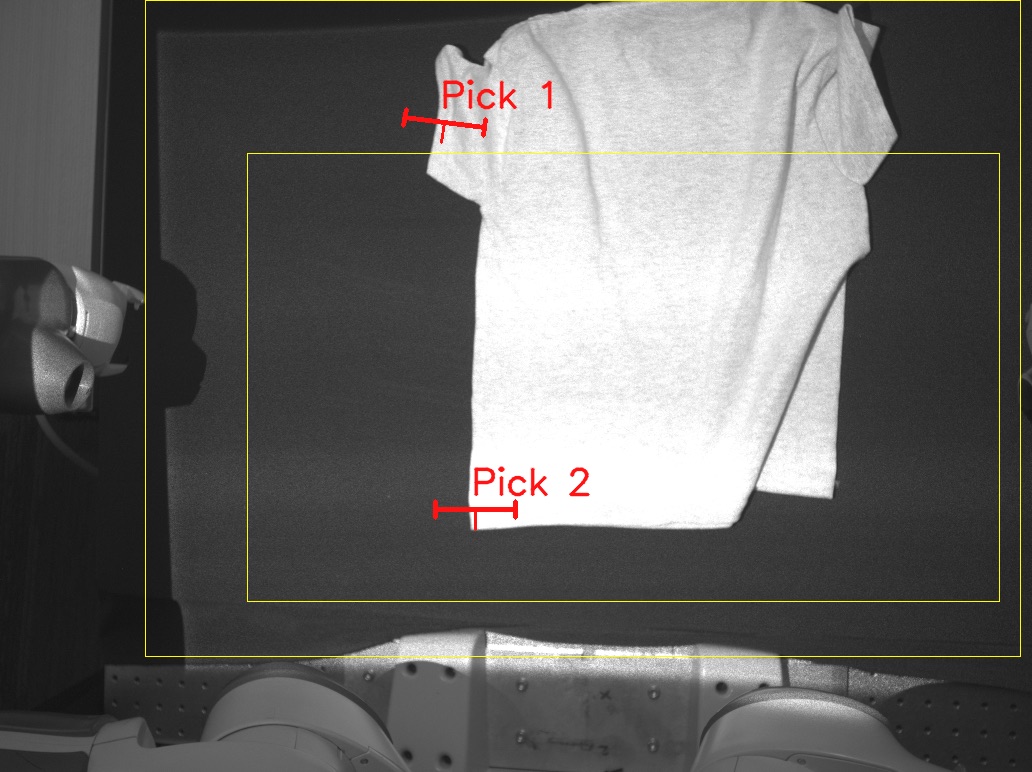

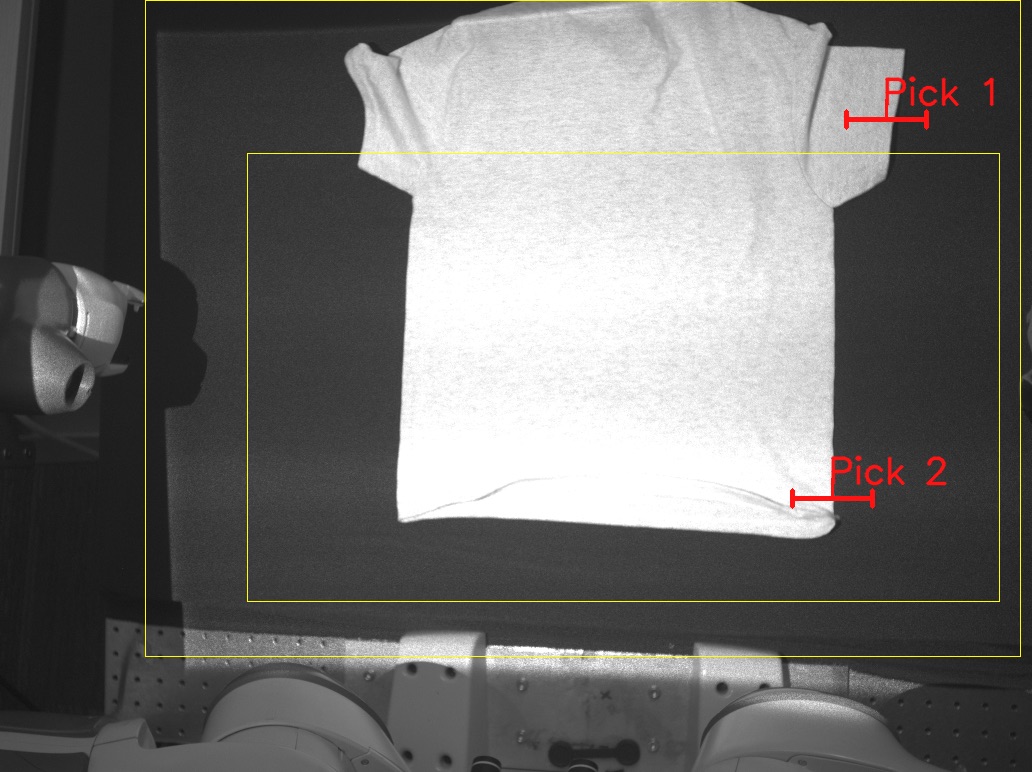

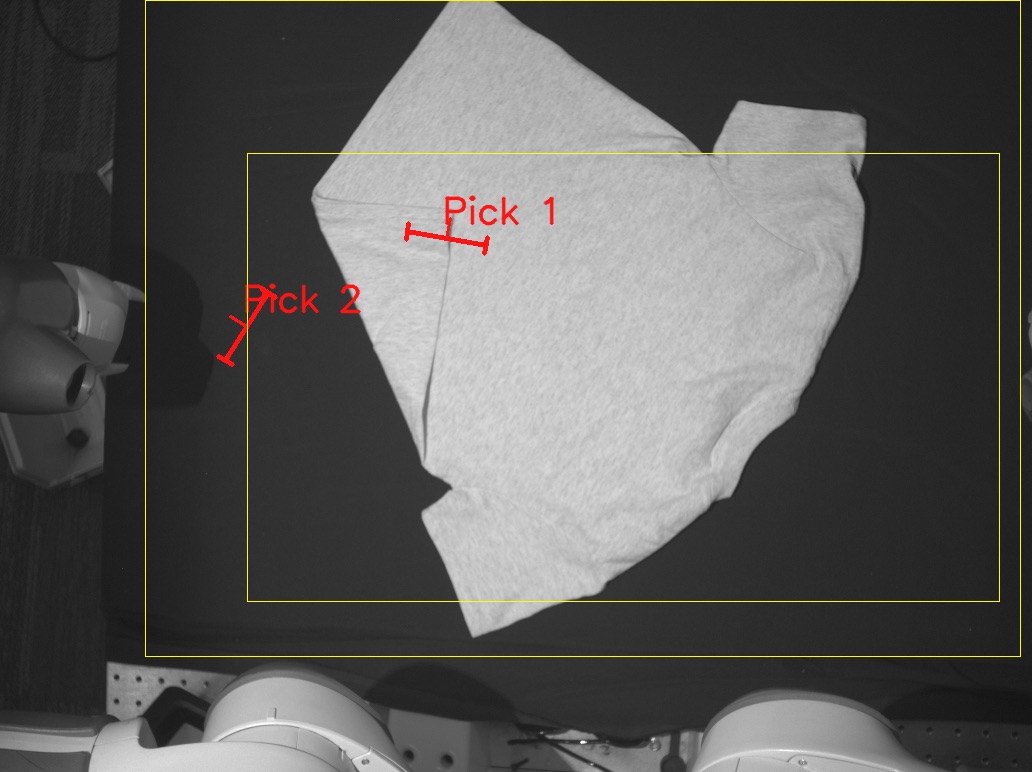

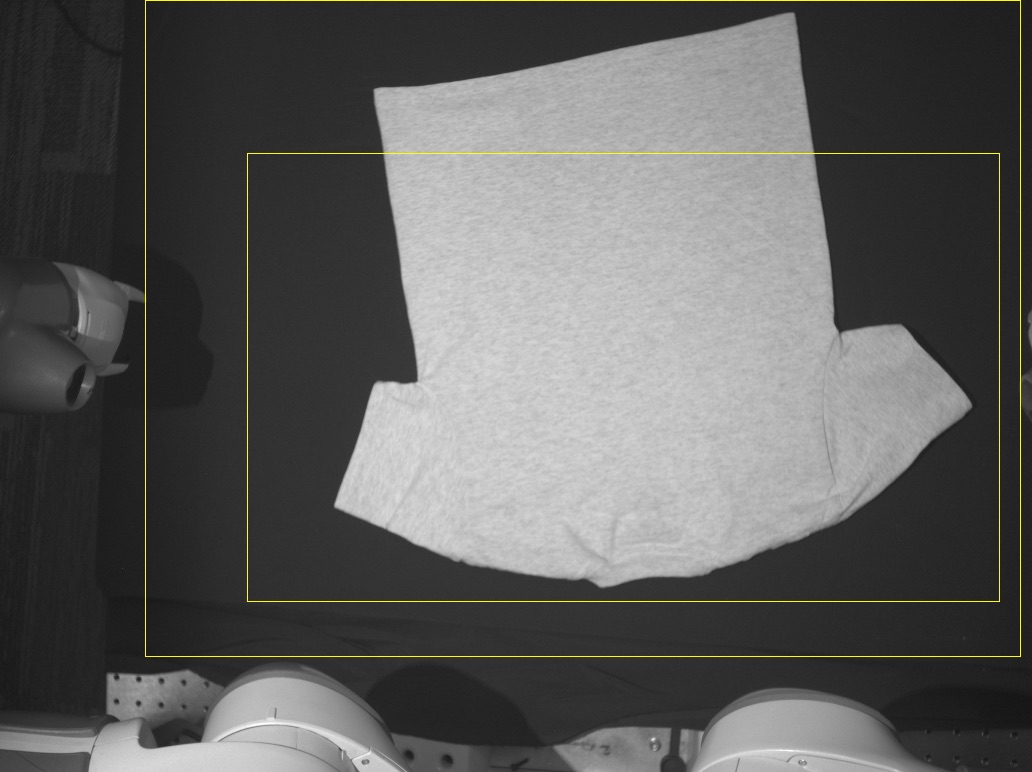

We define a set of manipulation primitives to smooth and then fold a garment from an arbitrary initial state. Each primitive is parametrized by their pick or place poses, which are either learned or calculated by a heuristic. In particular, we learn (1) the two pick poses for a fling primitive, (2) two pick poses for a drag primitive, and (3) the corresponding poses for a pick-and-place primitive. For predicting these, we introduce the novel Bimama-Net architecture for BiManual Manipulation.

Primitives for Smoothing

Fling Primitive

Drag Primitive

Pick-and-place Primitive

To scale the self-supervised training to over 2000 actions, the robot needs to collect data with as little human intervention as possible. The timelapse video below shows the robot interacting with the garment over a duration of around 40min, performing the set of primitives above to learn smoothing. The collected data is available below.

Timelapse (60x)

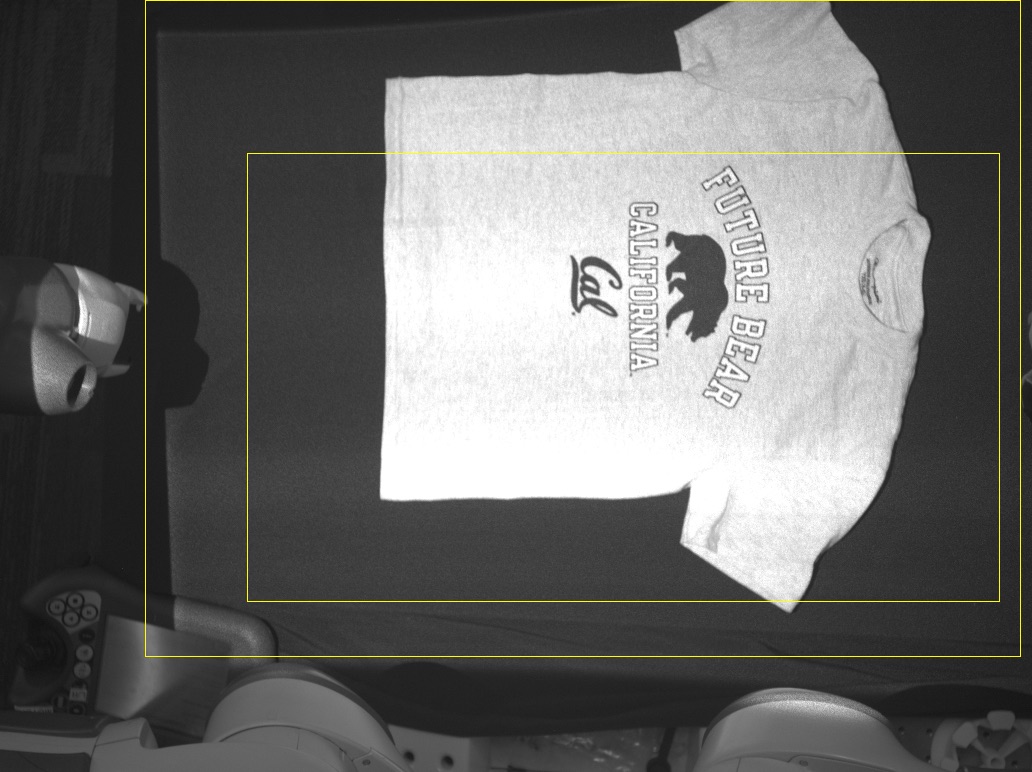

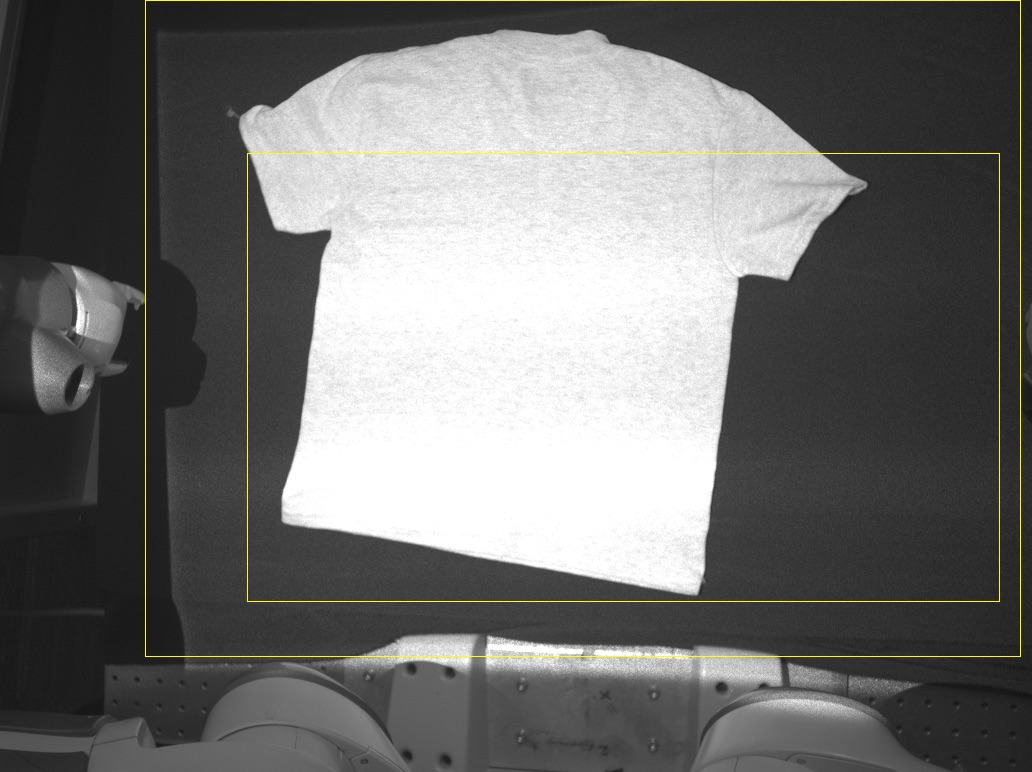

Below, example videos show the end-to-end folding from an arbitrary initial configuration of the t-shirt. On average, SpeedFolding takes less than 120s while achieving a success rate of 93%, significantly outperforming prior works requiring 10 to 20min per fold.

1 / 5

2 / 5

3 / 5

4 / 5

5 / 5

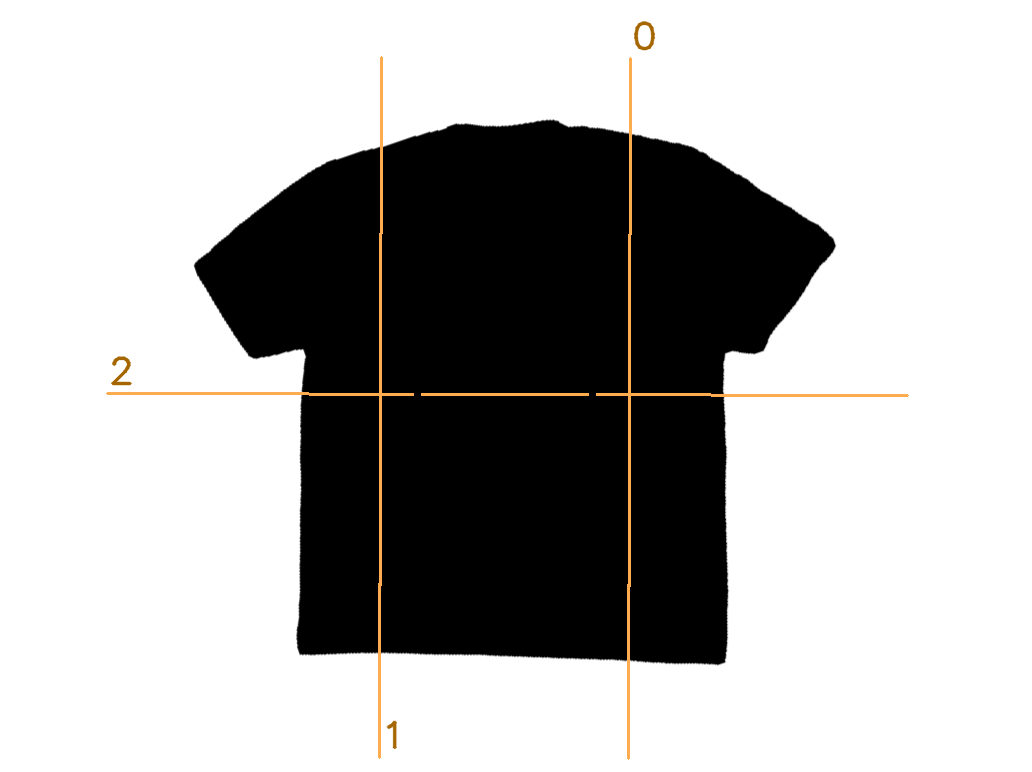

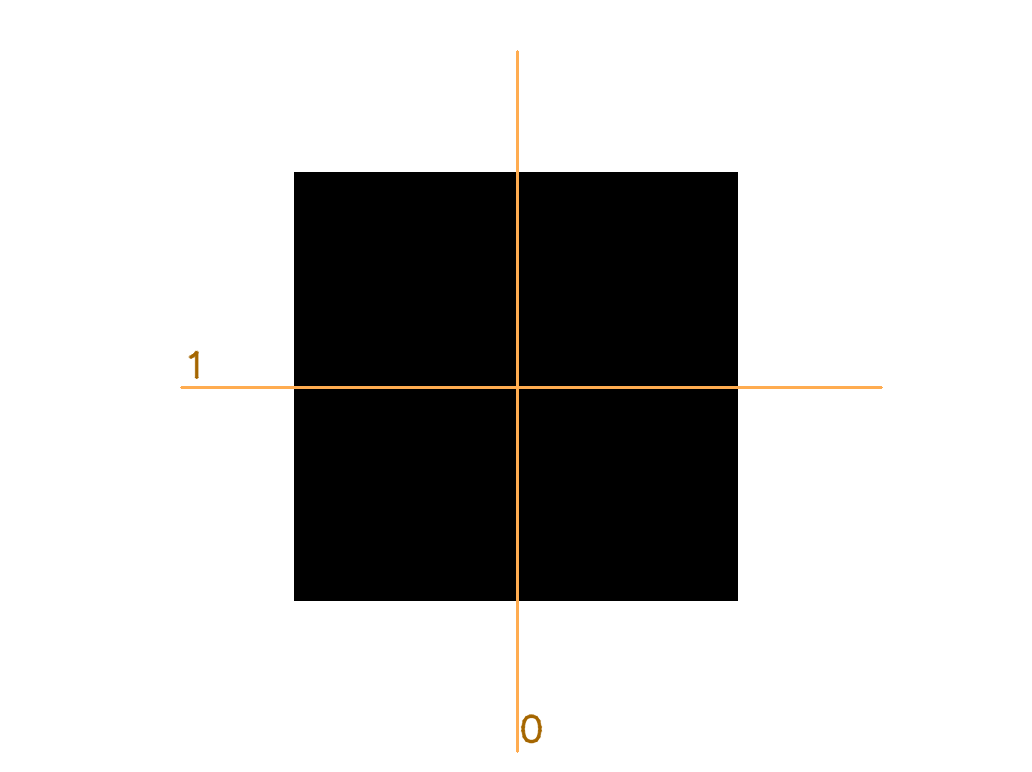

We define the goal state for each class of garment as a sequence of folding lines on top of a template mask. Besides their order, the folding lines also contain information about the direction of the fold. The template mask itself is fitted to a segmented image of the smoothed garment using particle swarm optimization.

T-Shirt

Towel

We've collected a dataset of over 4400 real-world actions, either human-annotated or through self-supervision. The complete dataset can be downloaded here (15.2GB). Camera images are stored in the collection directory, and are grouped by their respective episode_id. Both color and depth images are saved as *.png files; parameters for the depth encoding are available in the repository. Additionally, we provide the segmented mask of the garment as well as orthographic projections of all images, resulting in six images per action in total. All action information is stored in the SQLite database in the root directory, in particular in the actions table. Each action has an episode_id, an integer action_id and a json data column. Amongst others, each action contains a type field and two planar poses with a x, y, theta coordinate relative to the image dimension. In contrast to human-annotated actions (), self-supervised ones () have a numeric reward field (that might also be calculated from the before/after images and a trained Ready to Fold predictor). Here are some examples actions:

More information can be found in our repository.